“Teaching with Large Language Models” Workshop

On August 22, 2023, bioCEED, in collaboration with SLATE (UiB’s Centre for the Science of Learning & Technology), hosted a full-day workshop that brought together 19 instructors from all departments in the Faculty of Mathematics and Natural Sciences. Our primary goals were to share experiences with large language models (LLMs) such as ChatGPT, explore the opportunities and challenges these tools present for teaching and learning, and brainstorm new ways of integrating LLMs into our courses to support student learning. The event combined small cross-disciplinary group discussions with full-group discussions, led by Anne Bjune, Ståle Ellingsen, and Sehoya Cotner (all from Biological Sciences) and David Grellscheid (Informatics). Raquel Coelho, an associate researcher at SLATE, also joined the workshop to study the instructors’ experiences with LLMs during the fall semester.

Before we split into small groups to discuss the challenges and opportunities of existing LLMs, David Grellscheid explained why he dislikes the “intelligence” part of the term “Artificial Intelligence.” He emphasized that although the tool may appear intelligent, humans are the true interpreters: it is people who derive meaning from its outputs.

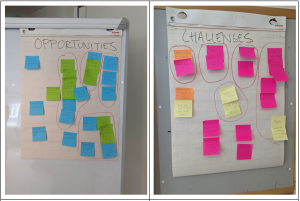

Participants worked in groups to develop lists of opportunities and challenges associated with LLM use.

While most participants had used one or more of these tools, few had incorporated them into their teaching. We therefore discussed the potential educational uses of LLMs. Current versions of ChatGPT, Bard, Bing, and similar tools can summarize content, create outlines, act as a personal tutor and give feedback, suggest coding solutions, and help with brainstorming. We also considered emerging challenges associated with LLMs, such as a) crafting effective prompts, b) ensuring equity (not every student has equal access to these tools), c) addressing potential biases, d) handling concerns about cheating, and e) verifying the accuracy of the model’s output.

Our final small-group task was to collaborate on individual implementation plans for using an LLM in our teaching this semester. We collected all of these plans, and Raquel is in weekly contact with participants to learn how things are working out. We will reconvene in early December to share experiences and plan next steps for this group. Further updates are on the horizon!